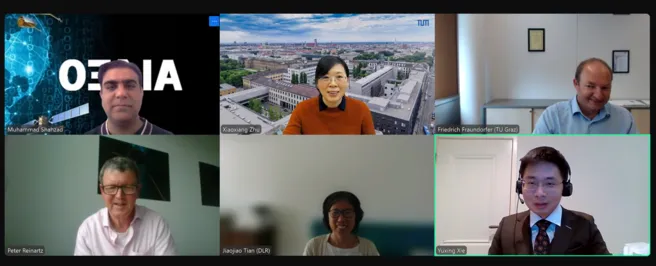

On 13 September 2024, Yuxing Xie defended his thesis entitled *Multimodal Co-learning with VHR Multispectral Imagery and Photogrammetric Data*, supervised by Prof. Dr. Xiaoxiang Zhu. The defense committee was chaired by Prof. Dr. Muhammad Shahzad. We particularly appreciate the engagement of the examiners Prof. Dr. Peter Reinartz from Osnabrück University and Prof. Dr. Friedrich Fraundorfer from Graz University of Technology!

Congratulations, Dr. Xie!

The rapid development of sensor technology has provided unprecedented access to diverse remote sensing data, linking numerous fields to remote sensing techniques more closely than ever before. Among these, urban remote sensing stands out as a crucial topic. Meanwhile, as the volume of available data far exceeds manual processing capacity, developing and implementing automatic processing methods has become necessary. As a significant breakthrough in big data analysis, deep learning has brought revolutionary changes to remote sensing, achieving remarkable performance on various benchmarks. In most cases, however, the success of deep learning relies on supervised training with similar data. Consequently, the performance of single-modal networks is limited in complex scenarios that a single modality cannot fully describe. Because it can integrate the complementary advantages of different data modalities, multimodal learning has been recognized as a solution to more challenging tasks.

As the most widely applied multimodal approach, data fusion has made progress in several urban remote sensing applications. However, it has two inherent limitations. First, it requires complete multimodal data even during the testing phase, which makes it difficult to apply to the vast volumes of single-modal data. Second, it cannot fully exploit the intrinsic information of raw heterogeneous data, potentially degrading performance. To avoid these limitations and exploit multimodal information in a more practical manner, this dissertation conducts a comprehensive investigation into multimodal co-learning: transferring knowledge between different data modalities while keeping each modality independent. Specifically, this dissertation makes the following contributions:

• We conduct comprehensive studies on two essential urban remote sensing tasks, building extraction and building change detection, using multispectral orthophotos and the corresponding photogrammetric geometric data derived from the same source. Depending on the task, we develop and employ appropriate networks for the different modalities, including unitemporal images, unitemporal point clouds, bitemporal images, and DSM-derived height difference maps.

• We develop three flexible multimodal co-learning frameworks adapted to different urban remote sensing tasks. These frameworks outperform single-modal learning and circumvent the limitations of conventional data fusion approaches. The first framework enhances building extraction networks in scenarios with limited labeled data. The second framework enhances building extraction networks when only cross-domain labeled data are available. The third framework targets building change detection and also uses cross-domain labeled data. The cornerstone of these frameworks' success is their ability to effectively transfer beneficial knowledge between the 2-D spectral modality and the 2.5-D/3-D geometric modality through labeled or unlabeled data pairs.

• Given the lack of public multimodal urban remote sensing data, we develop a large synthetic dataset that can be used to evaluate algorithms for building extraction, semantic segmentation, and building change detection. We conduct a series of experiments involving this synthetic dataset and real datasets, and our proposed multimodal co-learning frameworks achieve promising results. These results not only further demonstrate the capability of our methods but also show the potential of using cost-efficient, annotation-friendly synthetic training data for real urban remote sensing applications.

References:

Xie, Y., Tian, J., & Zhu, X. X. (2020). Linking points with labels in 3D: A review of point cloud semantic segmentation. IEEE Geoscience and Remote Sensing Magazine, 8(4), 38-59.

Xie, Y., Tian, J., & Zhu, X. X. (2023). A co-learning method to utilize optical images and photogrammetric point clouds for building extraction. International Journal of Applied Earth Observation and Geoinformation, 116, 103165.

Reyes, M. F., Xie, Y., Yuan, X., d’Angelo, P., Kurz, F., Cerra, D., & Tian, J. (2023). A 2D/3D multimodal data simulation approach with applications on urban semantic segmentation, building extraction and change detection. ISPRS Journal of Photogrammetry and Remote Sensing, 205, 74-97.

Xie, Y., Yuan, X., Zhu, X. X., & Tian, J. (2024). Multimodal co-learning for building change detection: A domain adaptation framework using VHR images and digital surface models. IEEE Transactions on Geoscience and Remote Sensing.