What is happening? This is a question people ask every day. For the remote sensing community, answering it matters for a wide range of applications, e.g., damage estimation and precision agriculture. Thanks to recent advances in Unmanned Aerial Vehicles (UAVs), massive numbers of aerial videos are readily accessible and can be used to detect what is happening and what will happen.

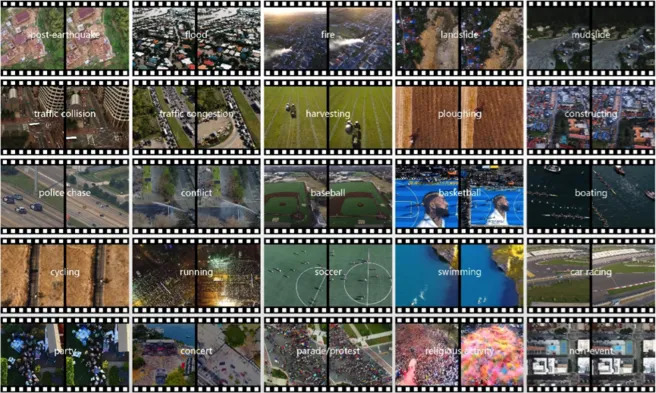

In this study, we introduce a novel problem, event recognition in aerial videos, and present a large-scale, human-annotated dataset named ERA (Event Recognition in Aerial videos), which consists of 2,864 videos collected from YouTube. Each video is manually annotated with one of 25 labels: 24 event classes and 1 non-event class. To offer a benchmark for this task, we extensively evaluate existing deep networks and report their results in this work. For further details, please refer to this publication. This work has also been reported by Synced.